
Introduction

In the evolving landscape of Artificial Intelligence (AI), open-source Large Language Models (LLMs) have become invaluable tools for developers, researchers, and enterprises. These models offer advanced AI capabilities for a wide range of applications, from chatbots to code generation, without the restrictions typically imposed by proprietary providers. This guide provides an in-depth comparison and analysis of the best open-source LLMs available in 2024, detailing their features, performance, and use cases.

Current Leaders in Open Source LLMs

As of 2024, several open-source LLMs have emerged as leaders in the field. Models like Mixtral, Mistral 7B, and Llama 2 are at the forefront, providing versatility and performance that rival many proprietary models. These models have become preferred choices for various applications due to their robust capabilities and open-access nature.

Top Models:

- Mixtral

- Mistral 7B

- Llama 2

Comprehensive Guide to Testing, Running, and Selecting LLMs

Selecting the right open-source LLM for your needs involves understanding the specific use case and performance requirements. The following sections provide detailed insights into how to test, run, and fine-tune these models effectively.

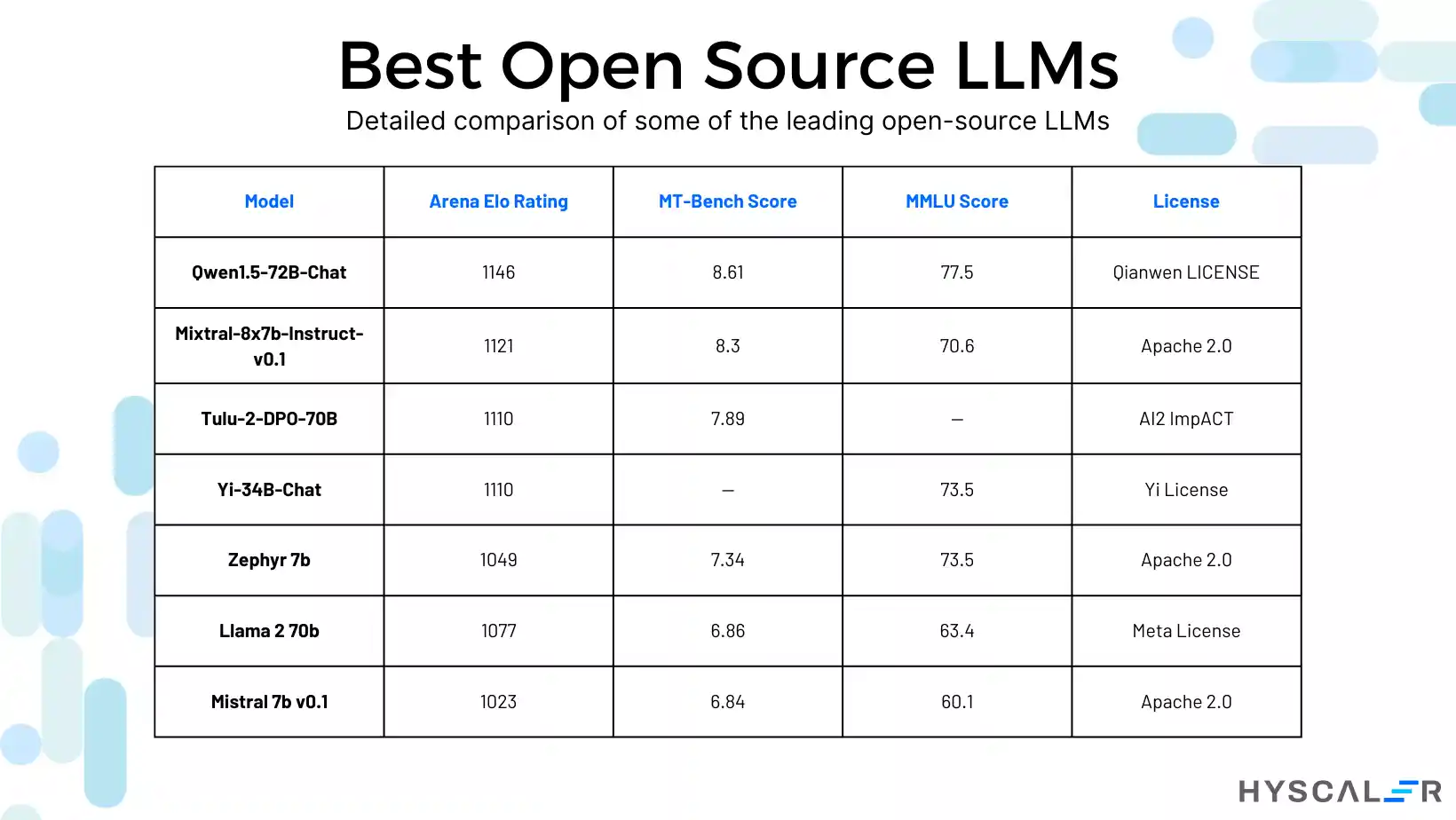

Best Open Source LLMs of 2024

Here’s a detailed comparison of some of the leading open-source LLMs:

Testing Open Source LLMs Online

Before downloading models and setting up software, it is worth trying LLMs on an online platform. Here are a few recommended playgrounds:

Perplexity Labs

Perplexity Labs offers a chat interface and API access to open-source LLMs, including Mistral 7B, CodeLlama, and Llama 2. The platform provides a user-friendly interface to evaluate these models’ capabilities without the need for extensive setup.

Vercel AI Playground

Vercel AI Playground supports inference with a range of models, such as BLOOM, Llama 2, Flan-T5, and GPT-NeoX-20B. It is well suited to quick testing and to comparing the output of different models.

Running Open Source LLMs Locally

Running LLMs locally gives you more control over the models and a clearer picture of their capabilities and limitations. Here are tools and steps for running popular LLMs on your own machine:

Ollama

Ollama supports running various AI models locally on macOS and Linux. It simplifies the process with commands like ollama run codellama. This tool is particularly useful for developers who want to test and use LLMs without relying on external servers.

Installation:

- On macOS, download Ollama from the official website.

- On Linux, install it with the command:

curl https://ollama.ai/install.sh | sh

Running Models:

- Use the command:

ollama run <model-name>, e.g., ollama run codellama.
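Besides the interactive ollama run command, a running Ollama instance also listens for HTTP requests, which is handy when calling a local model from your own code. The sketch below is a minimal example under stated assumptions: it targets Ollama's default address (http://localhost:11434) and its /api/generate endpoint with streaming turned off, it uses only the Python standard library, and the helper names build_generate_request and generate are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama's default local address and generate endpoint.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a non-streaming generation request for Ollama."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the request and return the generated text (needs a running Ollama server)."""
    with urllib.request.urlopen(build_generate_request(model, prompt)) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With streaming disabled, the reply arrives as one JSON object;
    # its "response" field holds the generated text.
    return body["response"]
```

With the Ollama server running and the model already pulled, generate("codellama", "Explain recursion in one sentence.") should return the model's reply as a string.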

LM Studio

LM Studio is a desktop application for discovering, downloading, and running local and open-source LLMs on your laptop. It provides an easy-to-use interface for experimenting with LLMs without the need for command-line setup.

Steps to Get Started:

- Visit the LM Studio website and download the application for your operating system.

- Install LM Studio on your laptop following the provided instructions.

- Launch LM Studio to discover and download various open-source LLMs.

- Use LM Studio’s interface to run the model locally on your laptop.

Jan

Jan offers offline operation and customization, making it a cost-effective tool for running LLMs locally without monthly subscriptions. It ensures data privacy and security by operating entirely on your hardware.

Getting Started:

- Download and install Jan from the official website.

- Set up the necessary tokens and configure the environment.

- Use Jan to run LLMs directly on your desktop.

Running Open Source LLMs in Production

Deploying LLMs in production involves considerations of scalability, reliability, and cost. Here are some platforms and methods to effectively run open-source LLMs in a production setting:

PPLX API

Perplexity Labs’ PPLX API provides fast and efficient access to LLMs, supporting models like Mistral 7B and Llama 2. This API is ideal for integrating LLM capabilities into applications with minimal overhead.

Steps to Get Started:

- Generate an API key from the Perplexity Account Settings Page.

- Send the API key as a bearer token in the Authorization header with each API request.

- Use the provided API endpoints to interact with the models.
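The three steps above map onto a single HTTP request. The following Python sketch assumes Perplexity's OpenAI-compatible chat completions endpoint (https://api.perplexity.ai/chat/completions), an OpenAI-style response body, and a PPLX_API_KEY environment variable. The helper names are hypothetical, and the model name in the usage note is only an example; available model names change over time, so check Perplexity's documentation.

```python
import json
import os
import urllib.request

# Assumption: Perplexity's OpenAI-compatible chat completions endpoint.
PPLX_URL = "https://api.perplexity.ai/chat/completions"


def build_pplx_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a chat request that sends the API key as a bearer token."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        PPLX_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(model: str, prompt: str) -> str:
    """Send a prompt using the key from the (hypothetical) PPLX_API_KEY variable."""
    req = build_pplx_request(os.environ["PPLX_API_KEY"], model, prompt)
    with urllib.request.urlopen(req) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # Assumption: OpenAI-style response with the reply in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

For example, ask("mistral-7b-instruct", "Summarize the benefits of open-source LLMs.") sends the prompt and returns the first reply, provided that model name is still offered.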

Cloudflare Workers AI

Cloudflare Workers AI allows running machine learning models on Cloudflare’s global network, providing low-latency inference. This platform supports a variety of open-source models and offers a scalable infrastructure for production deployment.

Steps to Use Cloudflare Workers AI:

- Choose an open-source LLM suitable for your application.

- Set up a Cloudflare Workers project and configure it for your model.

- Deploy your application using Cloudflare Workers or Pages.

- Monitor and optimize the application’s performance based on user feedback and cost considerations.
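The steps above describe a Workers project, which is typically written in JavaScript or TypeScript. For a quick first test, Workers AI models can also be called over Cloudflare's REST API. This Python sketch assumes an endpoint of the form /accounts/{account_id}/ai/run/{model}, a chat-style messages payload, and that text-generation models return their output under result.response. The account ID and API token come from your Cloudflare dashboard; the helper names are hypothetical, and the model name in the usage note is an example that may be renamed or retired.

```python
import json
import urllib.request

# Assumption: layout of the Workers AI REST endpoint.
CF_URL = "https://api.cloudflare.com/client/v4/accounts/{account_id}/ai/run/{model}"


def build_workers_ai_request(
    account_id: str, api_token: str, model: str, prompt: str
) -> urllib.request.Request:
    """Build a chat-style Workers AI request authenticated with a bearer token."""
    payload = {"messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        CF_URL.format(account_id=account_id, model=model),
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def run_model(account_id: str, api_token: str, model: str, prompt: str) -> str:
    """Send the request and return the generated text."""
    req = build_workers_ai_request(account_id, api_token, model, prompt)
    with urllib.request.urlopen(req) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # Assumption: text-generation models put their output under result.response.
    return body["result"]["response"]
```

For example, run_model(account_id, api_token, "@cf/mistral/mistral-7b-instruct-v0.1", "Hello!") would return the generated text if that model is available on your account.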

Replicate

Replicate simplifies deploying machine learning models with a few lines of code, scaling automatically based on traffic. It offers a Python library and a scalable API server for running LLMs.

Steps to Deploy Using Replicate:

- Create a Replicate account and obtain an API token.

- Install the Replicate Python client using:

pip install replicate

- Define your model with Cog and deploy it using Replicate.

- Use the Replicate API to interact with your model.
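Once you have an API token, the last step can also be done over plain HTTP instead of through the Python client. This sketch assumes Replicate's models/{owner}/{name}/predictions endpoint for official models, bearer-token authentication, and a prediction object with status, urls.get, and output fields. Because predictions run asynchronously, it polls until the prediction reaches a terminal status. All helper names are hypothetical, and the model slug in the usage note is only an example.

```python
import json
import time
import urllib.request
from typing import Optional

# Assumption: base URL of Replicate's HTTP API.
API_BASE = "https://api.replicate.com/v1"
TERMINAL_STATUSES = ("succeeded", "failed", "canceled")


def make_request(url: str, token: str, payload: Optional[dict] = None) -> urllib.request.Request:
    """POST with a JSON body when a payload is given, otherwise a plain GET."""
    data = json.dumps(payload).encode("utf-8") if payload is not None else None
    return urllib.request.Request(
        url,
        data=data,
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST" if payload is not None else "GET",
    )


def build_prediction_request(token: str, model: str, prompt: str) -> urllib.request.Request:
    # Assumption: model is an "owner/name" slug served via the official-models endpoint.
    url = f"{API_BASE}/models/{model}/predictions"
    return make_request(url, token, {"input": {"prompt": prompt}})


def send(req: urllib.request.Request) -> dict:
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))


def run_prediction(token: str, model: str, prompt: str, poll_seconds: float = 1.0) -> str:
    prediction = send(build_prediction_request(token, model, prompt))
    # Poll the prediction's "get" URL until it reaches a terminal status.
    while prediction["status"] not in TERMINAL_STATUSES:
        time.sleep(poll_seconds)
        prediction = send(make_request(prediction["urls"]["get"], token))
    if prediction["status"] != "succeeded":
        raise RuntimeError(f"Prediction ended with status {prediction['status']!r}")
    output = prediction["output"]
    # Assumption: language models usually return a list of text chunks to join.
    return "".join(output) if isinstance(output, list) else str(output)
```

For example, run_prediction(token, "meta/llama-2-70b-chat", "Write a haiku about autumn.") creates a prediction and waits for the result.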

Fine-Tuning Open Source LLMs

Fine-tuning allows customization of LLMs for specific tasks, enhancing their performance and relevance to particular use cases. Here’s how to fine-tune open-source LLMs using various platforms:

Modal

Modal enables cost-effective multi-GPU training, making it easy to fine-tune LLMs on private data with on-demand cloud resources. This platform is ideal for organizations looking to optimize models for their specific needs.

Steps to Fine-Tune with Modal:

- Create a Modal account and set up the environment.

- Install Modal with pip install modal, then authenticate with modal token new.

- Use the provided scripts and commands to launch fine-tuning jobs.

- Monitor and adjust the training process based on performance metrics.

Together.ai

Together.ai offers tools for dataset preparation and fine-tuning, making it straightforward to tailor LLMs to specific needs. This platform is user-friendly and supports a variety of models and fine-tuning techniques.

Steps to Fine-Tune with Together.ai:

- Prepare the dataset and upload it to Together.ai.

- Initiate the fine-tuning process using the provided commands.

- Monitor the fine-tuning process and evaluate the model’s performance.

- Use the fine-tuned model in production or for further development.
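Much of the work in the first step is getting the data into the right shape. Together.ai's documentation has described JSONL training files in which each line is a JSON object with a text field; treat that format, and the prompt template below, as assumptions to check against the current docs and your chosen base model. This Python sketch converts (prompt, response) pairs into that layout and validates the result before upload. The template and function names are hypothetical.

```python
import json
from typing import Iterable, Tuple

# Hypothetical prompt template; match the format your base model expects.
TEMPLATE = "[INST] {prompt} [/INST] {response}"


def to_jsonl(pairs: Iterable[Tuple[str, str]]) -> str:
    """Convert (prompt, response) pairs into JSONL, one {"text": ...} object per line."""
    lines = []
    for prompt, response in pairs:
        prompt, response = prompt.strip(), response.strip()
        if not prompt or not response:
            continue  # skip incomplete examples rather than training on them
        record = {"text": TEMPLATE.format(prompt=prompt, response=response)}
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n" if lines else ""


def validate_jsonl(content: str) -> int:
    """Check that every line parses and has a non-empty "text" string; return the count."""
    count = 0
    for lineno, line in enumerate(content.splitlines(), start=1):
        record = json.loads(line)
        if not isinstance(record.get("text"), str) or not record["text"]:
            raise ValueError(f"line {lineno}: missing or empty 'text' field")
        count += 1
    return count
```

Write the returned string to a file such as train.jsonl, run validate_jsonl on it, and then upload the file to Together.ai.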

SkyPilot

SkyPilot supports fine-tuning on spot instances with automatic recovery from preemptions, providing an efficient way to train models in the cloud. This platform is suitable for large-scale fine-tuning tasks that require significant computational resources.

Steps to Fine-Tune with SkyPilot:

- Set up the environment and configure cloud credentials.

- Use the provided scripts to launch fine-tuning jobs.

- Monitor the training process and adjust parameters as needed.

- Deploy the fine-tuned model using SkyPilot’s deployment tools.

Top Open Source LLMs (Large Language Models) for Small Businesses in 2024

Open-source large language models (LLMs) are transforming the landscape of AI applications, offering powerful tools that are both cost-effective and highly adaptable. For small businesses looking to leverage AI for various tasks, these models provide significant benefits. Here are the top open-source LLMs for small businesses in 2024:

- Falcon 180B: With 180 billion parameters, Falcon 180B from the Technology Innovation Institute is one of the largest openly available models and performs strongly on language tasks. Its size also makes it demanding to run, so it suits businesses that can provision substantial GPU capacity, typically in the cloud.

- Llama 2: Available in 7B, 13B, and 70B parameter sizes, Llama 2 is a robust family of models for natural language processing (NLP) and text generation. The 70B variant's large parameter count supports nuanced understanding and generation of text, making it suitable for complex applications, while the smaller variants are cheaper to run.

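- Mixtral: Mistral AI's Mixtral 8x7B is a sparse mixture-of-experts model that activates only a subset of its parameters for each token, which keeps inference costs well below those of a dense model with the same total parameter count. It performs well across a range of language processing tasks and gives small businesses room to tailor it to their specific needs.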

- Mistral 7B: This transformer model uses grouped-query attention and sliding-window attention, which speed up inference and let it handle longer sequences efficiently for its size. Mistral 7B suits businesses that need solid text comprehension and generation from a model small enough to run on modest hardware.

- Smaug-72B: Designed for general assistant tasks, Smaug-72B is adept at understanding and generating text. Its versatility makes it a valuable tool for creating interactive chatbots and automating customer service operations.
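The parameter counts above give a rough sense of the hardware each model needs, because the weights alone take about (number of parameters) × (bytes per parameter) of memory. This minimal Python sketch applies that rule of thumb to the nominal sizes of Mistral 7B and Llama 2 70B. It deliberately ignores the KV cache, activations, and runtime overhead, so real requirements are higher; the function name is hypothetical.

```python
# Bytes used to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate memory in decimal GB for the weights alone (no KV cache or overhead)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Nominal parameter counts; actual checkpoints differ slightly.
for name, params in [("Mistral 7B", 7e9), ("Llama 2 70B", 70e9)]:
    for precision in ("fp16", "int4"):
        print(f"{name} @ {precision}: ~{weight_memory_gb(params, precision):.1f} GB")
```

At 16-bit precision this gives roughly 14 GB for a 7B model and 140 GB for a 70B model; 4-bit quantization cuts those figures to about 3.5 GB and 35 GB, which is why quantized builds are popular for running models locally.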

Benefits of Using Open Source LLMs for Small Businesses

- Customization: Open-source models allow for extensive customization, enabling businesses to modify and adapt the models to meet specific industry requirements and operational needs.

- Cost Savings: Without licensing fees, open-source LLMs are a cost-effective option, letting small businesses deploy advanced AI capabilities while paying mainly for the compute they use.

- Data Security and Privacy: Running these models on internal infrastructure ensures greater control over data security and privacy, critical for businesses handling sensitive information.

- Transparency: Because the model weights, and usually the inference code, are publicly available, businesses can inspect and validate the models' behavior themselves, which supports reliability and trustworthiness, although the training data is often not released.

- Rapid Iteration and Experimentation: Open-source LLMs facilitate quick testing and iteration of changes, enabling businesses to experiment with new features and improvements without long delays or additional costs.

Applications for Open Source LLMs

These models are highly versatile and can be employed in a wide range of applications:

- Content Generation: Creating, editing, and translating text content for blogs, websites, and marketing materials.

- Smart Chatbots: Developing intelligent chatbots that can handle customer inquiries, provide support, and enhance user engagement.

- Research: Conducting in-depth research and analysis by processing large volumes of text data to extract meaningful insights.

Conclusion

The landscape of open-source LLMs in 2024 is rich with innovation and potential. Models like Mixtral, Mistral 7B, and Llama 2 lead the way in providing robust AI capabilities without the constraints of proprietary systems. By leveraging these open-source tools, businesses and developers can drive significant advancements in AI applications, ensuring flexibility, scalability, and cost-effectiveness. Embrace the power of open-source LLMs to elevate your projects and stay ahead in the ever-evolving field of AI.

In summary, whether you are looking to experiment with new models, run LLMs locally for development, deploy them in production environments, or fine-tune them for specific applications, the open-source LLM ecosystem offers a wealth of options to explore. With continuous advancements and community contributions, these models are set to revolutionize the way we approach AI and machine learning, making sophisticated AI accessible to all.