Docker Swarm and Kubernetes are both tools for orchestrating container deployments in distributed environments. Each provides high availability for workloads by scaling container replicas across a cluster of compute nodes.

In this comparison, we look at the characteristics of these two container orchestration tools and explain how they differ, to help you select the most appropriate tool for your next application deployment. Let us compare Docker Swarm and Kubernetes!

What is Docker Swarm?

Docker Swarm is the clustering and orchestration tool built into Docker Engine. It allows you to manage a cluster of Docker engines as a single virtual entity, so that a pool of Docker hosts behaves like one large host. This enables you to scale applications and services with ease.

Standard Docker commands are designed to operate on a single container at a time. Running a docker run command creates a single container on the host machine. Docker Swarm adds the ability to start multiple container replicas, which are then distributed across the Docker hosts in the Swarm cluster.

The Swarm controller oversees both the hosts and the Docker containers, ensuring that the desired quantity of healthy replicas is maintained.

What is Kubernetes?

Kubernetes is an open-source container orchestration platform designed to automate the deployment, scaling, and management of containerized applications. Initially developed by Google, it is now maintained by the Cloud Native Computing Foundation (CNCF).

Kubernetes is designed to address the challenges of cloud-native container operations. Beyond container replication and scaling, it provides ways to manage persistent storage, configure services, handle secrets, and run batch jobs.

It is also extensible, supporting custom resource types and application-specific automated operators.

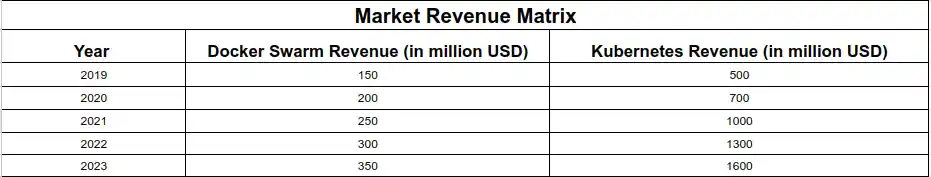

Recent Market Revenue Matrix:

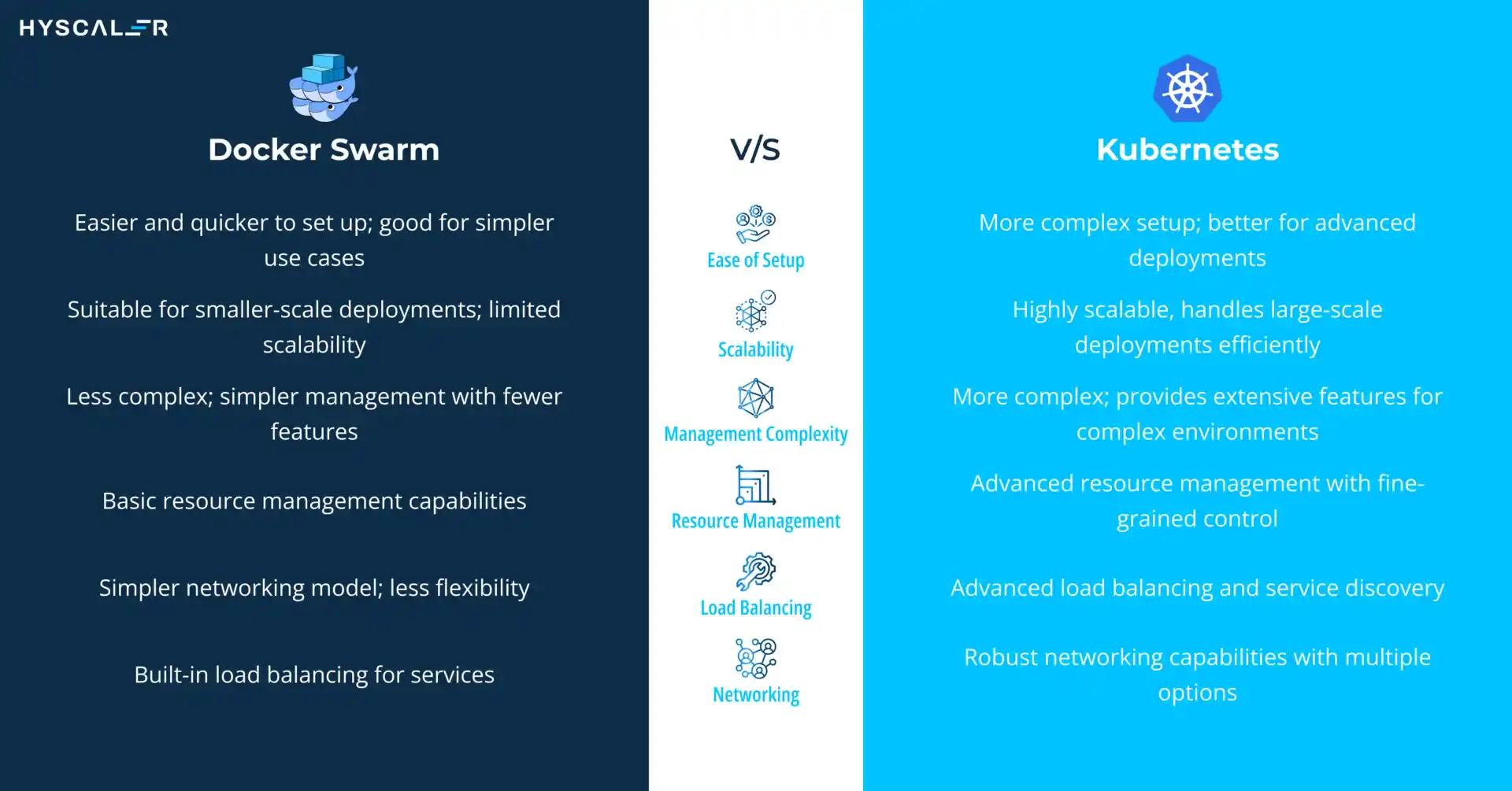

Differences Between Docker Swarm and Kubernetes:

Use Cases Summary

- Docker Swarm: Best for simpler applications with less complexity, small to medium-sized deployments, and development or testing environments where ease of setup and minimal overhead are desired.

- Kubernetes: Suitable for complex, large-scale applications requiring robust scalability, high availability, detailed resource management, and advanced networking capabilities. It is well-suited for production environments and multi-cloud or hybrid cloud setups.

Key Features of Docker Swarm:

Cluster Management Integrated with Docker Engine: Docker Swarm is built into Docker Engine, making it easy to use with existing Docker tools and workflows. This integration simplifies the management of clusters of Docker nodes, enabling the orchestration of services without additional dependencies.

Declarative Service Model: Docker Swarm uses a declarative approach to define services. Users specify the desired state of the services, and Docker Swarm takes care of maintaining that state, ensuring that the specified number of replicas are running, and automatically restarting containers in case of failures.

Scalability: Docker Swarm allows users to scale services up or down with a single command. The orchestrator automatically adjusts the number of running containers to match the specified state, distributing workloads efficiently across the cluster.

Load Balancing: Swarm provides built-in load balancing through its ingress routing mesh, distributing incoming requests across the tasks of a service regardless of which node receives them. This spreads workloads evenly and optimizes resource utilization.

Service Discovery: Docker Swarm has built-in service discovery mechanisms. Each service within a Swarm cluster can be accessed by a unique DNS name, making it easy to connect different services without hardcoding IP addresses.

Rolling Updates: Swarm supports rolling updates for services, allowing users to update applications with zero downtime. It sequentially updates tasks, monitoring their health and ensuring that the desired state is maintained throughout the process.

Fault Tolerance: Docker Swarm ensures high availability by replicating services across multiple nodes. If a node fails, Swarm automatically reschedules the affected tasks on other healthy nodes, maintaining the desired state and minimizing downtime.

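Security: Docker Swarm offers security features like mutual TLS for secure node communication and built-in secrets management. Role-based access control is not part of open-source Swarm mode itself and has been provided by commercial Docker enterprise tooling. These features help protect data in transit and ensure that only authenticated nodes can take part in the cluster.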

Easy Deployment: With Docker Compose files, users can define multi-container applications and deploy them to a Swarm cluster with a single command. This simplifies the deployment process and enables consistent environments across development, testing, and production.
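As a rough illustration of the declarative model, rolling updates, and Compose-based deployment described above, the sketch below writes a minimal stack file and defines a helper that deploys it. The service name web, the image tag, the replica count, the file location, and the helper name deploy_stack are all illustrative assumptions, not taken from a real project.

```shell
# Write a minimal stack file. Service name, image tag, and numbers are
# illustrative assumptions.
stack_file="${TMPDIR:-/tmp}/web-stack.yml"
cat > "$stack_file" <<'EOF'
version: "3.8"
services:
  web:
    image: nginx:1.27
    ports:
      - "8080:80"
    deploy:
      replicas: 3            # desired state: three running tasks
      update_config:
        parallelism: 1       # rolling update, one task at a time
        delay: 10s
      restart_policy:
        condition: on-failure
EOF

# Hypothetical helper: deploy (or update) the stack from a Swarm manager node.
# $1 = stack name
deploy_stack() {
  docker stack deploy -c "$stack_file" "$1"
}
```

On a manager node, `deploy_stack web` would create the stack; editing the file and running it again asks Swarm to converge on the new desired state.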

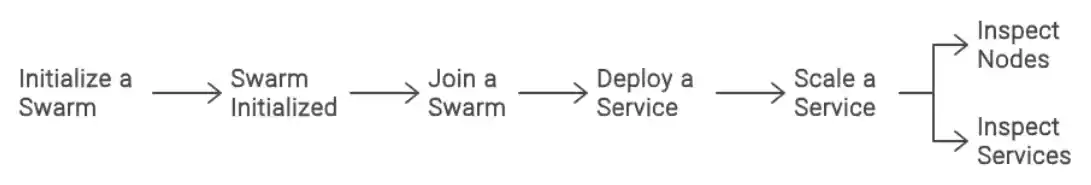

Docker Swarm Basic Commands & Operations:

This document provides a flow diagram for basic Docker Swarm commands and operations. Docker Swarm is a clustering and scheduling tool for Docker containers that allows you to manage a cluster of Docker nodes as a single virtual system. The commands covered include initializing a swarm, joining a swarm, deploying a service, scaling a service, and inspecting the swarm.

Flow Chart

Initialize a Swarm: This command initializes a new swarm, setting up the current node as the manager.

Join a Swarm: This command allows a node to join an existing swarm using the provided token and manager IP address.

Deploy a Service: This command deploys a new service to the swarm, specifying the service name, number of replicas, and the Docker image to use.

Scale a Service: This command scales the specified service to the desired number of replicas.

Inspect the Swarm: These commands list the nodes in the swarm and the services running in the swarm, respectively.

By following these commands and operations, you can effectively manage a Docker Swarm cluster, deploy and scale services, and inspect the state of the swarm.
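The five operations above map onto standard Docker CLI subcommands. The sketch below wraps each one in a small shell function so the arguments are explicit; the function names are hypothetical, and port 2377 is assumed to be the manager's default cluster management port.

```shell
# Thin wrappers around the Docker CLI. Function names are hypothetical;
# the docker subcommands themselves are the standard ones.

# Initialize a swarm; this node becomes the first manager. $1 = this node's IP.
swarm_init() { docker swarm init --advertise-addr "$1"; }

# Join an existing swarm. $1 = join token, $2 = manager IP (default port assumed).
swarm_join() { docker swarm join --token "$1" "$2:2377"; }

# Deploy a service. $1 = service name, $2 = replicas, $3 = image.
swarm_deploy() { docker service create --name "$1" --replicas "$2" "$3"; }

# Scale a service. $1 = service name, $2 = desired replicas.
swarm_scale() { docker service scale "$1=$2"; }

# List the nodes in the swarm, then the services running in it.
swarm_inspect() { docker node ls && docker service ls; }
```

For example, `swarm_deploy web 3 nginx` followed by `swarm_scale web 5` would start three replicas and then grow the service to five. The join token is printed by docker swarm init on the manager.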

Key Features of Kubernetes:

Automated Deployment and Scaling: It automates the deployment of containerized applications and their scaling based on resource usage or custom metrics, ensuring optimal performance and resource utilization.

Self-Healing: It automatically restarts failed containers, replaces and reschedules containers when nodes die, and kills containers that don’t respond to user-defined health checks, ensuring that the desired state of the application is maintained.

Service Discovery and Load Balancing: It provides built-in service discovery and load balancing, allowing containers to find and communicate with each other without complex configuration.

Storage Orchestration: It can automatically mount the storage system of your choice, such as local storage, public cloud providers, or network storage systems, to persist data for stateful applications.

Secret and Configuration Management: It allows you to manage sensitive information, such as passwords and API keys, and application configuration separately from the application code, enhancing security and flexibility.

Declarative Configuration: It uses declarative configurations to manage applications and infrastructure. Users define the desired state in configuration files (usually YAML), and Kubernetes works to maintain that state.

Extensibility: It is highly extensible, with a rich ecosystem of plugins and extensions, including custom controllers and operators, to meet diverse application needs.
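To make the declarative configuration and self-healing features above more concrete, here is a minimal sketch that writes a Deployment manifest with a liveness probe and defines a helper to apply it. The names, image tag, probe timings, and file location are illustrative assumptions.

```shell
# Write a Deployment manifest. Names, image tag, and probe timings are
# illustrative assumptions.
manifest="${TMPDIR:-/tmp}/nginx-deployment.yaml"
cat > "$manifest" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 3                  # desired state: three pods
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.27
          ports:
            - containerPort: 80
          livenessProbe:       # container is restarted if this check fails
            httpGet:
              path: /
              port: 80
            initialDelaySeconds: 5
            periodSeconds: 10
EOF

# Hypothetical helper: submit the desired state to the cluster.
apply_manifest() { kubectl apply -f "$manifest"; }
```

Running `apply_manifest` again after editing the file (for instance changing replicas) asks Kubernetes to reconcile the cluster toward the new desired state.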

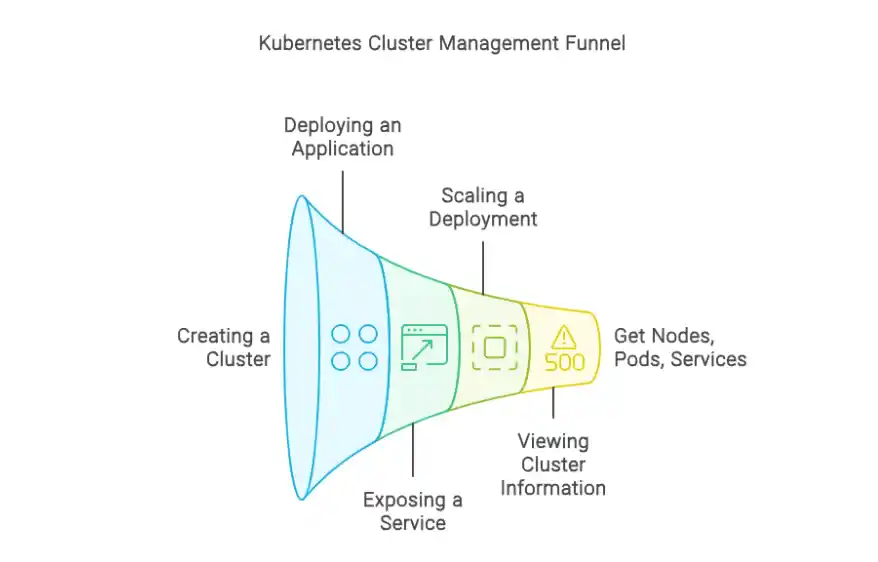

Kubernetes Basic Commands & Operations:

This document provides a visual representation of the basic commands and operations for managing a Kubernetes cluster. The flow chart outlines the steps for creating a cluster, deploying an application, exposing a service, scaling a deployment, and viewing cluster information.

Flow Chart

Creating a Cluster

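kubectl itself does not create clusters; a cluster is provisioned with a separate tool such as minikube, kind, or kubeadm, or through a managed cloud service. For a local test cluster with minikube, for example:

minikube start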

Deploying an Application

kubectl create deployment nginx --image=nginx

Exposing a Service

kubectl expose deployment nginx --port=80 --type=LoadBalancer

Scaling a Deployment

kubectl scale deployment nginx --replicas=3

Viewing Cluster Information

kubectl get nodes

kubectl get pods

kubectl get services

This flow chart and the associated commands provide a quick reference for performing essential Kubernetes operations.

Benefits of Using Kubernetes with Docker:

Enhanced Orchestration:

- Container Management: It provides powerful orchestration for managing Docker containers, automating deployment, scaling, and operation of containerized applications.

- Automated Scheduling: It schedules containers across a cluster of machines based on resource requirements and constraints, optimizing resource usage and load balancing.

Scalability:

- Horizontal Scaling: It supports horizontal scaling of applications, allowing you to easily increase or decrease the number of container instances based on demand.

- Cluster Autoscaling: Automatically adjusts the number of nodes in your cluster based on resource usage and workload.
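The horizontal scaling described above can be automated with a HorizontalPodAutoscaler. The following is a minimal sketch using kubectl autoscale; the helper name is hypothetical, and it assumes the cluster has a metrics source (such as metrics-server) and that the target containers declare CPU requests. Flag names can vary between kubectl versions, so check kubectl autoscale --help for yours.

```shell
# Hypothetical helper around `kubectl autoscale`.
# $1 = deployment name, $2 = min replicas, $3 = max replicas,
# $4 = target average CPU utilization in percent
autoscale_deployment() {
  kubectl autoscale deployment "$1" --min="$2" --max="$3" --cpu-percent="$4"
}
```

For example, `autoscale_deployment nginx 2 10 70` would keep between 2 and 10 replicas, adding pods when average CPU use rises above roughly 70 percent of the requested amount.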

High Availability:

- Self-Healing: It ensures high availability by automatically replacing failed containers and redistributing workloads.

- Rolling Updates: Allows for seamless rolling updates to applications with minimal downtime, with rollback to a previous revision if needed.
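As a sketch of how a rolling update with rollback might be scripted, the helper below changes a Deployment's image, waits for the rollout, and reverts if it does not complete. The function name and the 120-second timeout are assumptions chosen for illustration.

```shell
# Hypothetical helper: roll out a new image and undo the change on failure.
# $1 = deployment name, $2 = container name, $3 = new image
rolling_update() {
  kubectl set image "deployment/$1" "$2=$3" || return 1
  if kubectl rollout status "deployment/$1" --timeout=120s; then
    echo "rollout of $1 succeeded"
  else
    echo "rollout of $1 failed; rolling back" >&2
    kubectl rollout undo "deployment/$1"
    return 1
  fi
}
```

A call such as `rolling_update nginx nginx nginx:1.27` replaces pods gradually according to the Deployment's update strategy, and returns non-zero if it had to roll back.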

Service Discovery and Load Balancing:

- Built-in Service Discovery: It provides automatic service discovery and DNS resolution for containers, making it easy for applications to find and communicate with each other.

- Load Balancing: Distributes incoming network traffic across multiple containers to ensure even load distribution and improve application reliability.

Resource Optimization:

- Efficient Resource Utilization: It optimizes the allocation of resources (CPU, memory) for containers, reducing wastage and improving efficiency.

- Custom Resource Limits: Allows setting resource requests and limits for containers to ensure fair allocation and prevent resource contention.

Configuration Management:

- ConfigMaps and Secrets: It provides mechanisms to manage configuration data and sensitive information (such as passwords) separately from application code, improving security and manageability.

- Declarative Configuration: Uses declarative configuration files to manage the state of applications and infrastructure, enabling version control and reproducibility.
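To illustrate keeping configuration and secrets out of application code, here is a minimal sketch that creates a ConfigMap and a Secret with kubectl. The object names app-config and db-credentials, the keys, and the helper name are hypothetical; the password is read from a file so it does not appear on the command line.

```shell
# Hypothetical helper: create a ConfigMap and a Secret in one namespace.
# $1 = namespace, $2 = path to a file containing the database password
create_app_config() {
  kubectl create configmap app-config -n "$1" \
    --from-literal=LOG_LEVEL=info &&
  kubectl create secret generic db-credentials -n "$1" \
    --from-literal=username=app \
    --from-file=password="$2"
}
```

Pods can then consume these values as environment variables or mounted files, so the same container image runs unchanged across environments.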

Multi-Cloud and Hybrid Cloud Support:

- Cloud-Agnostic: It can run on various cloud providers (e.g., AWS, Azure, Google Cloud) and on-premises, allowing for flexible deployment across multiple environments.

- Hybrid Deployments: Facilitates hybrid cloud strategies by enabling workloads to be distributed across public and private clouds.

Extensibility and Ecosystem:

- Rich Ecosystem: It has a large ecosystem of tools and extensions, including monitoring (e.g., Prometheus), logging (e.g., ELK stack), and CI/CD integrations (e.g., Jenkins).

- Custom Resources and Operators: Allows defining custom resource types and operators to extend its functionality for specific needs.

Improved Development Workflow:

- Continuous Integration and Deployment (CI/CD): It integrates well with CI/CD pipelines, enabling automated testing, deployment, and scaling of applications.

- DevOps Practices: Supports modern DevOps practices by providing automation and consistency in application deployment and management.

Security:

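- Pod Security: It offers various security features like Pod Security Standards (enforced through Pod Security Admission, which replaced the Pod Security Policies removed in Kubernetes 1.25), Role-Based Access Control (RBAC), and network policies to enforce security measures and control access.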

- Namespace Isolation: Provides isolation between different applications and environments using Kubernetes namespaces.
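The namespace isolation and RBAC features above can be combined to give a user read-only access to pods in a single namespace. This is a minimal sketch; the role name pod-reader, the helper names, and the example user are hypothetical.

```shell
# Hypothetical helper: create a namespace and grant a user read-only
# access to pods inside it. $1 = namespace, $2 = user name
grant_pod_reader() {
  kubectl create namespace "$1" &&
  kubectl create role pod-reader -n "$1" \
    --verb=get,list,watch --resource=pods &&
  kubectl create rolebinding "$2-pod-reader" -n "$1" \
    --role=pod-reader --user="$2"
}

# Check the result: asks the API server whether the user may list pods.
# $1 = namespace, $2 = user name
can_list_pods() { kubectl auth can-i list pods -n "$1" --as="$2"; }
```

After `grant_pod_reader staging jane`, the check `can_list_pods staging jane` should answer yes, while the same check against another namespace should answer no.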

Why is Docker Swarm Less Suitable for Complex Applications Compared to Kubernetes?

While Docker Swarm and Kubernetes are both powerful container orchestration tools, Kubernetes is often the preferred choice for managing complex applications. Here are the reasons why Docker Swarm might not be the best fit for more complex applications:

Feature Set and Flexibility: Kubernetes provides a richer feature set and greater flexibility, making it better suited to intricate orchestration and complex applications.

Scalability: Kubernetes handles large-scale deployments more effectively, supporting thousands of nodes and containers with better performance.

Stateful Applications: Kubernetes has robust support for stateful applications through StatefulSets and advanced storage orchestration, unlike Docker Swarm.

Networking: Kubernetes offers a more powerful networking model with features like network policies and support for service meshes, essential for complex networking requirements.

Ecosystem and Community Support: Kubernetes has a larger ecosystem with extensive community support and third-party tools, providing better solutions for complex needs.

Deployment and Management: The Kubernetes ecosystem includes advanced tools like Helm and Operators, enhancing deployment, monitoring, and management of complex applications.

Security: Kubernetes has advanced security features, including RBAC and network policies, crucial for maintaining security in multi-tenant environments.

Configuration Management: Kubernetes offers ConfigMaps and Secrets for flexible and secure configuration management, unlike Docker Swarm’s basic support.

Job and Batch Processing: Kubernetes supports job and batch processing natively, essential for applications requiring these capabilities.
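As a small illustration of the native job and batch support mentioned above, the sketch below wraps kubectl create job and kubectl create cronjob. The helper names and the busybox image are assumptions chosen for the example.

```shell
# Hypothetical helpers for one-off and scheduled batch work.

# Run a command once as a Job. $1 = job name; remaining args = command.
run_once() (
  name=$1; shift
  kubectl create job "$name" --image=busybox -- "$@"
)

# Run a command on a cron schedule. $1 = name, $2 = cron expression;
# remaining args = command.
run_on_schedule() (
  name=$1; schedule=$2; shift 2
  kubectl create cronjob "$name" --image=busybox --schedule="$schedule" -- "$@"
)
```

For instance, `run_once hello echo hi` creates a Job that runs to completion once, and `run_on_schedule nightly "0 2 * * *" echo hi` creates a CronJob that starts a new Job every night at 02:00.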

Examples:

Gluu, a provider of open-source identity and access management solutions, employs Docker Swarm for its development and testing environments. The ease of use associated with Docker Swarm enables its developers to efficiently establish and dismantle clusters for testing activities.

Benefit: This approach minimizes configuration errors and enhances productivity by streamlining the creation of uniform development environments.

OpenROV, a firm focused on underwater drone technology, employs Docker Swarm to oversee the deployment of containerized applications on edge devices. These devices are responsible for processing sensor data collected from distant underwater sites.

Benefit: The advantage of this approach lies in its lightweight and efficient nature, making it ideal for environments with limited resources. Additionally, it supports Continuous Integration and Continuous Deployment (CI/CD) practices.

Airbnb employs Kubernetes to oversee its microservices architecture, facilitating service discovery, load balancing, and automated rollouts and rollbacks for its worldwide platform.

Benefit: This approach provides strong support for intricate microservices, ensuring high availability and fault tolerance, thereby enabling scalable web applications.