
Fusing LiDAR data, which provides precise depth information, with camera images, which capture rich color information, creates a more comprehensive understanding of the environment. This fusion enables enhanced perception for applications like autonomous driving, robotics, and 3D mapping.

In this article, we will walk through the step-by-step process of fusing LiDAR data with camera images, covering both camera calibration and LiDAR-camera extrinsic calibration.

1. Camera Calibration

Camera calibration estimates the camera's intrinsic parameters. These describe how a point in 3D camera coordinates maps to a 2D pixel and, conversely, which 3D viewing ray each pixel corresponds to.

Proper calibration ensures that the camera image can be rectified and aligned with other data sources like LiDAR.
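For reference, the standard pinhole model collects the intrinsic parameters in a 3 × 3 matrix K. Here f_x and f_y are the focal lengths in pixels, (c_x, c_y) is the principal point, and s is the skew, which is usually assumed to be zero. Ignoring lens distortion, a point (X_c, Y_c, Z_c) in camera coordinates, with Z_c > 0, maps to pixel (u, v) as:

```latex
K = \begin{bmatrix} f_x & s & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix},
\qquad
\begin{bmatrix} u \\ v \\ 1 \end{bmatrix}
= K \begin{bmatrix} X_c / Z_c \\ Y_c / Z_c \\ 1 \end{bmatrix}
```

When lens distortion is modeled, it is applied to the normalized coordinates (X_c / Z_c, Y_c / Z_c) before multiplying by K. Inverting the mapping yields only a ray, because the depth Z_c is lost.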

Steps for Camera Calibration

Step 1: Select a Calibration Target

Choose a well-defined target for calibration, such as a checkerboard pattern or a specialized calibration grid. The target should have known geometric properties and be placed within the camera’s field of view during image capture.
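The following is a minimal sketch that assumes a planar checkerboard with a known inner-corner count and square size. It writes down the target's known geometry as 3D points in the target's own frame. The function name is hypothetical, and the 9 × 6 corners and 25 mm squares in the usage line are illustrative values. The row-by-row ordering matches the order in which checkerboard detectors such as OpenCV's `findChessboardCorners` report inner corners.

```python
import numpy as np

def checkerboard_object_points(cols, rows, square_size):
    """3D coordinates of a checkerboard's inner corners in the target frame.

    Corners lie on the Z = 0 plane, ordered row by row (x varies fastest),
    spaced `square_size` apart in whatever unit you choose (e.g. metres).
    """
    xs, ys = np.meshgrid(np.arange(cols), np.arange(rows))
    points = np.zeros((rows * cols, 3), dtype=np.float64)
    points[:, 0] = xs.ravel() * square_size
    points[:, 1] = ys.ravel() * square_size
    return points
```

Usage: `object_points = checkerboard_object_points(9, 6, 0.025)` gives 54 points, all with Z = 0.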

Step 2: Capture Calibration Images

Take multiple images of the calibration target at different positions and orientations. Ensure that the target is visible in various parts of the image frame and from different angles to help with accurate calibration.

Step 3: Extract Features from Images

Use a corner detector, such as the Harris corner detector or a dedicated checkerboard-corner detector, to extract features from the calibration images, ideally refining their positions to sub-pixel accuracy. These features, typically the inner corners of the calibration target, act as reference points for calibration.
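The sketch below illustrates what a corner detector measures. It computes the Harris response R = det(M) - k * trace(M)^2 with NumPy, where M is the structure tensor of image gradients summed over a window around each pixel. It uses a simple box window rather than the usual Gaussian weighting, along with the conventional k = 0.04. Both function names are hypothetical. Corners produce large positive R, edges produce negative R, and flat regions produce values near zero. In practice a library routine would be used instead, such as OpenCV's `cornerHarris`, or `findChessboardCorners` followed by `cornerSubPix`.

```python
import numpy as np

def box_sum(img, radius):
    """Sum over a (2r+1) x (2r+1) window around each pixel (zero padding)."""
    padded = np.pad(img, radius)
    # Integral image with a leading row and column of zeros.
    ii = np.zeros((padded.shape[0] + 1, padded.shape[1] + 1))
    ii[1:, 1:] = padded.cumsum(axis=0).cumsum(axis=1)
    k = 2 * radius + 1
    return ii[k:, k:] - ii[:-k, k:] - ii[k:, :-k] + ii[:-k, :-k]

def harris_response(img, k=0.04, radius=2):
    """Harris response R = det(M) - k * trace(M)^2 at every pixel."""
    img = np.asarray(img, dtype=np.float64)
    iy, ix = np.gradient(img)  # derivatives along rows (y) and columns (x)
    # Structure tensor M, summed over a box window around each pixel.
    sxx = box_sum(ix * ix, radius)
    syy = box_sum(iy * iy, radius)
    sxy = box_sum(ix * iy, radius)
    det = sxx * syy - sxy * sxy
    trace = sxx + syy
    return det - k * trace * trace
```

On a synthetic image whose lower-right quadrant is bright, the strongest response lies within a couple of pixels of the quadrant's corner, while pixels along its straight edges score negative.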

Step 4: Match Features Across Images

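Associate each detected corner with its known position on the calibration target, using the same ordering in every image. These 2D-3D correspondences, each pairing a pixel position with a position on the target, are used to estimate the camera's intrinsic parameters.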

Step 5: Apply a Calibration Algorithm

Apply a camera calibration algorithm, such as Zhang's method or Tsai's method, to estimate the intrinsic parameters. These algorithms use the feature correspondences to solve for the focal length, principal point, and lens distortion coefficients, along with the pose of the target in each calibration image.
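The sketch below covers only the closed-form initialization of Zhang's method and ignores lens distortion, which Zhang's method estimates later during the nonlinear refinement. Each view of the planar target gives a plane-to-image homography H. Every H contributes two linear constraints on B = K^-T K^-1, and K is then recovered from B. All function names are hypothetical. The point normalization is a standard conditioning step added here for numerical stability. In practice, OpenCV's `calibrateCamera` performs the whole procedure for planar targets.

```python
import numpy as np

def _normalizer(pts):
    """Similarity transform: center 2D points, scale mean distance to sqrt(2)."""
    mean = pts.mean(axis=0)
    scale = np.sqrt(2.0) / np.mean(np.linalg.norm(pts - mean, axis=1))
    return np.array([[scale, 0.0, -scale * mean[0]],
                     [0.0, scale, -scale * mean[1]],
                     [0.0, 0.0, 1.0]])

def _apply(T, pts):
    """Apply a 2D similarity transform (3x3, last row [0, 0, 1]) to (N, 2) points."""
    return pts @ T[:2, :2].T + T[:2, 2]

def estimate_homography(src, dst):
    """Normalized DLT estimate of H with dst ~ H src (needs >= 4 point pairs)."""
    Ts, Td = _normalizer(src), _normalizer(dst)
    rows = []
    for (x, y), (u, v) in zip(_apply(Ts, src), _apply(Td, dst)):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    # Null vector of the stacked constraints = last right singular vector.
    _, _, vt = np.linalg.svd(np.asarray(rows))
    H = np.linalg.inv(Td) @ vt[-1].reshape(3, 3) @ Ts  # undo normalization
    return H / np.linalg.norm(H)

def _v(H, i, j):
    """Zhang's v_ij: v_ij . b = h_i^T B h_j for b = (B11, B12, B22, B13, B23, B33)."""
    hi, hj = H[:, i], H[:, j]
    return np.array([hi[0] * hj[0],
                     hi[0] * hj[1] + hi[1] * hj[0],
                     hi[1] * hj[1],
                     hi[2] * hj[0] + hi[0] * hj[2],
                     hi[2] * hj[1] + hi[1] * hj[2],
                     hi[2] * hj[2]])

def calibrate_intrinsics(views):
    """Closed-form K from >= 3 views; views = [(plane_xy (N,2), pixels (N,2)), ...]."""
    # Work in normalized pixel units for conditioning; then K_n = Tp @ K.
    Tp = _normalizer(np.vstack([px for _, px in views]))
    V = []
    for plane_xy, pixels in views:
        H = estimate_homography(plane_xy, _apply(Tp, pixels))
        V.append(_v(H, 0, 1))                # r1 . r2 = 0
        V.append(_v(H, 0, 0) - _v(H, 1, 1))  # |r1| = |r2|
    _, _, vt = np.linalg.svd(np.asarray(V))
    b11, b12, b22, b13, b23, b33 = vt[-1]    # B = K^-T K^-1, up to scale
    v0 = (b12 * b13 - b11 * b23) / (b11 * b22 - b12 ** 2)
    lam = b33 - (b13 ** 2 + v0 * (b12 * b13 - b11 * b23)) / b11
    alpha = np.sqrt(lam / b11)
    beta = np.sqrt(lam * b11 / (b11 * b22 - b12 ** 2))
    gamma = -b12 * alpha ** 2 * beta / lam
    u0 = gamma * v0 / beta - b13 * alpha ** 2 / lam
    K_n = np.array([[alpha, gamma, u0], [0.0, beta, v0], [0.0, 0.0, 1.0]])
    return np.linalg.inv(Tp) @ K_n           # back to pixel units
```

Each view yields two equations on the six entries of B. When skew is estimated, at least three views of the target at different orientations are therefore required.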

Step 6: Optimize Calibration Results

Once the intrinsic parameters are estimated, perform optimization (e.g., nonlinear least squares optimization) to refine the calibration results. The goal is to minimize the error between the observed features and the projected features from the camera’s model.
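Written out, the refinement is typically posed as the following nonlinear least-squares problem over all n images and m target points. In this formulation:

- x_ij is the detected position of corner j in image i.
- X_j is the corner's known position on the target.
- d holds the distortion coefficients.
- (R_i, t_i) is the target's pose in image i.
- π is the full projection model: rigid transform, then distortion, then K.

Levenberg-Marquardt is the usual solver, initialized from the closed-form estimate.

```latex
\min_{K,\,\mathbf{d},\,\{R_i,\mathbf{t}_i\}} \;
\sum_{i=1}^{n} \sum_{j=1}^{m}
\bigl\| \mathbf{x}_{ij} - \pi(K, \mathbf{d}, R_i, \mathbf{t}_i, \mathbf{X}_j) \bigr\|^2
```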

Step 7: Final Intrinsic Parameter Estimation

The outcome of the camera calibration process is a set of intrinsic parameters, including the focal length, principal point, and distortion coefficients, which are essential for accurately rectifying and processing camera images.
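Distortion coefficients are most often expressed in the Brown-Conrady radial-tangential model, which is OpenCV's default. The sketch below assumes OpenCV's coefficient order (k1, k2, p1, p2, k3). It applies distortion to normalized camera coordinates and then maps the result to pixels with K. The function names are hypothetical. Undistorting (rectifying) an image inverts this mapping, and OpenCV provides that operation as `cv2.undistort`.

```python
import numpy as np

def distort_normalized(xy, dist):
    """Apply radial + tangential distortion to normalized coords x' = X/Z, y' = Y/Z.

    xy:   (N, 2) array of normalized camera coordinates.
    dist: (k1, k2, p1, p2, k3), assuming OpenCV's coefficient order.
    """
    k1, k2, p1, p2, k3 = dist
    x, y = xy[:, 0], xy[:, 1]
    r2 = x * x + y * y
    radial = 1 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    x_d = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    y_d = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    return np.column_stack([x_d, y_d])

def to_pixels(xy_distorted, K):
    """Map (distorted) normalized coordinates to pixels with the intrinsic matrix K."""
    ones = np.ones((len(xy_distorted), 1))
    return (np.hstack([xy_distorted, ones]) @ K.T)[:, :2]
```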

2. LiDAR-Camera Extrinsic Calibration

LiDAR-camera extrinsic calibration involves determining the transformation between the LiDAR sensor and the camera, which is essential for aligning their respective coordinate systems. The extrinsic parameters describe the relative position and orientation between the two sensors, enabling accurate fusion of their data.
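In equation form, the extrinsic parameters are a rotation R and a translation t, six degrees of freedom in total, often packed into a 4 × 4 homogeneous matrix T_CL. This assumes the convention that the extrinsics map LiDAR coordinates p_L into camera coordinates p_C. The opposite direction is equally common, so check which one a given tool uses.

```latex
\mathbf{p}_C = R\,\mathbf{p}_L + \mathbf{t},
\qquad
\begin{bmatrix} \mathbf{p}_C \\ 1 \end{bmatrix}
= \underbrace{\begin{bmatrix} R & \mathbf{t} \\ \mathbf{0}^\top & 1 \end{bmatrix}}_{T_{CL}}
\begin{bmatrix} \mathbf{p}_L \\ 1 \end{bmatrix},
\qquad R \in SO(3),\; \mathbf{t} \in \mathbb{R}^3
```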

Steps for LiDAR-Camera Extrinsic Calibration

Step 1: Data Acquisition

Synchronize the data acquisition from both the LiDAR and the camera. Ensure that the data is timestamped correctly, ideally on a common clock, so that each LiDAR sweep can be paired with the camera frame captured at the same moment.
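The pairing step can be sketched as follows. The sketch assumes both sensors stamp their data on a common clock, in seconds. Each LiDAR sweep is matched to the nearest camera frame and discarded if no frame falls within a tolerance. The function name and the 20 ms tolerance in the usage line are illustrative. A rotating LiDAR also collects each sweep over a period of time, so fast-moving platforms may need per-point motion compensation, which this sketch does not attempt.

```python
import bisect

def match_timestamps(lidar_stamps, camera_stamps, max_offset):
    """Pair each LiDAR sweep with the nearest camera frame in time.

    Timestamps are in seconds on a shared clock; camera_stamps must be sorted.
    Pairs further apart than max_offset are dropped.
    Returns a list of (lidar_index, camera_index) tuples.
    """
    pairs = []
    for i, t in enumerate(lidar_stamps):
        j = bisect.bisect_left(camera_stamps, t)
        # The nearest frame is either just before or just after t.
        candidates = [c for c in (j - 1, j) if 0 <= c < len(camera_stamps)]
        if not candidates:
            continue
        best = min(candidates, key=lambda c: abs(camera_stamps[c] - t))
        if abs(camera_stamps[best] - t) <= max_offset:
            pairs.append((i, best))
    return pairs
```

Usage: `pairs = match_timestamps(lidar_times, camera_times, max_offset=0.02)`.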

Step 2: Include a Calibration Target in the Scene

Place a calibration target with known geometry (e.g., a checkerboard or a 3D object with known dimensions) in the scene. The target should be visible from both the camera's and the LiDAR's perspectives.

Step 3: Feature Extraction

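Extract features from the calibration target in both the point cloud and the camera images. For the camera, these could be corners or edges. LiDAR point clouds are usually too sparse to locate checkerboard corners directly, so LiDAR features are typically geometric: the points on the target's surface, the plane they define, or the target's edges and corners estimated from that plane.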
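A common building block for LiDAR features on a flat target is fitting a plane to the points that hit it. The plane's normal and position can then be compared with the board plane seen by the camera, or intersected with detected edges to estimate corners. The sketch below assumes the target's points have already been isolated, for example by cropping a region of interest; real pipelines often add RANSAC to reject outliers. It fits the plane by SVD, and the function names are hypothetical.

```python
import numpy as np

def fit_plane(points):
    """Least-squares plane through an (N, 3) point set.

    Returns (normal, centroid). The unit normal is the direction of least
    variance: the right singular vector with the smallest singular value.
    """
    centroid = points.mean(axis=0)
    _, _, vt = np.linalg.svd(points - centroid, full_matrices=False)
    return vt[-1], centroid

def point_to_plane_distances(points, normal, centroid):
    """Signed distance of each point to the fitted plane."""
    return (points - centroid) @ normal
```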

Step 4: Feature Matching

Match the extracted features between the camera images and the point cloud. The goal is to establish correspondences between 2D points (from the camera) and 3D points (from the LiDAR).

Step 5: Pose Estimation

Using the matched features, estimate the transformation between the LiDAR and the camera. This step involves calculating the rotation and translation (extrinsic parameters) that map the LiDAR coordinate system to the camera's coordinate system. When the correspondences are 2D-3D point pairs, this is the classic Perspective-n-Point (PnP) problem.
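One linear way to solve this uses the Direct Linear Transform. It assumes 2D-3D point correspondences and a calibrated K. The method first undoes the intrinsics, then solves for the 3 × 4 matrix [R | t] up to scale, and finally projects its left 3 × 3 block onto the nearest rotation. This is a sketch of an initialization, not a full PnP solver. It requires at least six correspondences that are not all coplanar, for example corners collected from several board placements. The function name is hypothetical. Libraries such as OpenCV's `solvePnP` implement more robust solvers.

```python
import numpy as np

def pnp_dlt(points_3d, pixels, K):
    """Estimate R, t with pixels ~ K (R X + t) via the linear DLT.

    Needs >= 6 correspondences that are not all coplanar. The result is a
    starting point that should be refined by minimizing reprojection error.
    """
    # Undo the intrinsics so the remaining model is x_n ~ [R | t] X.
    ones = np.ones((len(pixels), 1))
    norm = np.hstack([pixels, ones]) @ np.linalg.inv(K).T
    norm = norm[:, :2] / norm[:, 2:3]
    rows = []
    for X, (x, y) in zip(points_3d, norm):
        Xh = np.append(X, 1.0)
        zero = np.zeros(4)
        rows.append(np.concatenate([Xh, zero, -x * Xh]))
        rows.append(np.concatenate([zero, Xh, -y * Xh]))
    _, _, vt = np.linalg.svd(np.asarray(rows))
    P = vt[-1].reshape(3, 4)
    if np.linalg.det(P[:, :3]) < 0:  # SVD sign is arbitrary; want +scale * R
        P = -P
    # Nearest rotation to the 3x3 block; its singular values give the scale.
    U, S, Vt = np.linalg.svd(P[:, :3])
    R = U @ Vt
    t = P[:, 3] / S.mean()
    return R, t
```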

Step 6: Optimization

Optimize the pose estimate by minimizing the reprojection error. This error is the distance, in pixels, between each observed 2D image feature and the projection of its matched 3D LiDAR feature under the current pose estimate and the camera intrinsics.
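Below is a minimal sketch of the quantity being minimized. It assumes undistorted image coordinates and the LiDAR-to-camera convention p_cam = R p_lidar + t, and the function names are hypothetical. A nonlinear least-squares solver, such as Levenberg-Marquardt via `scipy.optimize.least_squares`, would adjust R and t to minimize the sum of squared errors. R is typically parameterized as a rotation vector for this purpose.

```python
import numpy as np

def reprojection_errors(points_3d, pixels, K, R, t):
    """Per-point pixel distance between observed and reprojected features.

    points_3d: (N, 3) LiDAR-frame features; pixels: (N, 2) matched image features.
    R, t: candidate LiDAR-to-camera pose, so p_cam = R p_lidar + t.
    """
    cam = points_3d @ R.T + t          # LiDAR frame -> camera frame
    proj = cam @ K.T                   # apply intrinsics (no distortion here)
    proj = proj[:, :2] / proj[:, 2:3]  # perspective division
    return np.linalg.norm(proj - pixels, axis=1)

def rms(errors):
    """Root-mean-square of the per-point errors, in pixels."""
    return float(np.sqrt(np.mean(errors ** 2)))
```

The RMS of these errors, in pixels, is the usual single-number summary of calibration quality.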

Step 7: Final Extrinsic Parameters

After optimization, the output is the final transformation matrix, which consists of the rotation and translation components that describe the relative pose between the LiDAR and the camera.
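The following is a minimal sketch that assumes the result is stored as a 4 × 4 homogeneous matrix mapping LiDAR coordinates into the camera frame. The helper names are hypothetical. Because R is orthogonal, the inverse transform (camera frame back to LiDAR frame) follows in closed form without a general matrix inversion.

```python
import numpy as np

def make_transform(R, t):
    """Pack rotation R (3x3) and translation t (3,) into a 4x4 homogeneous matrix."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T

def invert_transform(T):
    """Closed-form inverse of a rigid transform: rotation R^T, translation -R^T t."""
    R, t = T[:3, :3], T[:3, 3]
    return make_transform(R.T, -R.T @ t)
```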

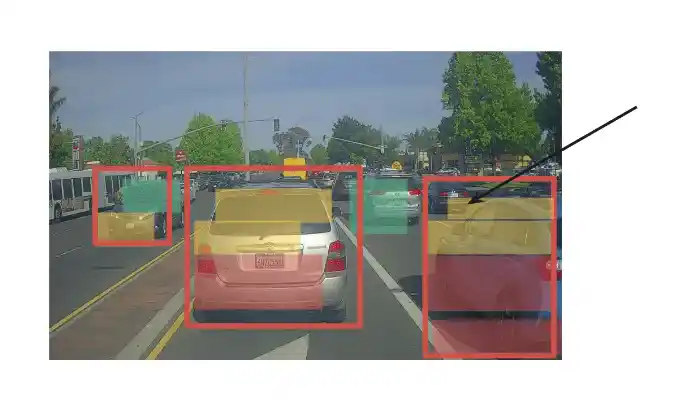

3. Coordinate Alignment and Fusion

With both the intrinsic and extrinsic parameters estimated, the next step is to align the LiDAR data with the camera images. This involves transforming the 3D LiDAR points into the camera frame and projecting them onto the 2D image plane using the camera's projection matrix.
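Combining both sets of parameters gives the 3 × 4 projection matrix P = K [R | t], which maps a LiDAR point to pixel (u, v). This assumes an undistorted image and the LiDAR-to-camera convention p_C = R p_L + t:

```latex
s \begin{bmatrix} u \\ v \\ 1 \end{bmatrix}
= K \begin{bmatrix} R & \mathbf{t} \end{bmatrix}
\begin{bmatrix} X_L \\ Y_L \\ Z_L \\ 1 \end{bmatrix},
\qquad s = Z_C
```

The scale factor s is the point's depth in the camera frame. Only points with s > 0 lie in front of the camera and can appear in the image.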

Steps for Coordinate Alignment and Fusion

Step 1: Apply LiDAR-Camera Extrinsic Parameters

Use the extrinsic parameters (rotation and translation) to transform each LiDAR point into the camera's coordinate system. This must come first, because the intrinsic parameters only describe how points already expressed in camera coordinates map to pixels.

Step 2: Apply Camera Intrinsic Parameters

Use the camera's intrinsic parameters (focal length, principal point, and, for an unrectified image, the distortion coefficients) to project the transformed 3D points onto the 2D image plane. Points behind the camera or outside the image bounds are discarded. Each remaining point keeps its depth, giving a sparse depth value at its pixel location.
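The two steps can be sketched together. The extrinsic transform has to be applied first, because K only maps points that are already expressed in the camera frame. This NumPy sketch makes several assumptions: the convention p_cam = R p_lidar + t, an undistorted (rectified) image, and a 0.1 m minimum depth. The minimum depth and the function name are hypothetical.

```python
import numpy as np

def project_lidar_to_image(points_lidar, K, R, t, width, height, min_depth=0.1):
    """Project LiDAR points into pixel coordinates.

    Returns (pixels, depths, mask): pixels (M, 2) and depths (M,) for points
    that land inside the image; mask (N,) marks which input points those are.
    """
    cam = points_lidar @ R.T + t              # 1) extrinsics: LiDAR -> camera
    in_front = cam[:, 2] > min_depth          # drop points behind the camera
    proj = cam @ K.T                          # 2) intrinsics
    with np.errstate(divide="ignore", invalid="ignore"):
        uv = proj[:, :2] / proj[:, 2:3]       # perspective division
    inside = (
        in_front
        & (uv[:, 0] >= 0) & (uv[:, 0] < width)
        & (uv[:, 1] >= 0) & (uv[:, 1] < height)
    )
    return uv[inside], cam[inside, 2], inside
```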

Step 3: Fuse the Data

Integrate the camera's color data with the depth information provided by the LiDAR. This fusion allows for a richer, more accurate representation of the environment, where LiDAR provides depth and the camera images provide texture and color.
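The following is a minimal sketch of the color side of the fusion. Each projected LiDAR point takes the color of its nearest pixel, producing a colored point cloud. The sketch assumes the projected pixel positions are already known to lie inside the image, and the function name is hypothetical. Because the two sensors sit at different positions, a LiDAR point hidden from the camera's viewpoint can still receive the color of whatever occludes it. Handling that case requires an extra visibility check not shown here.

```python
import numpy as np

def colorize_points(pixels, image):
    """Sample an (H, W, 3) image at projected pixel positions (nearest pixel).

    pixels: (M, 2) array of (u, v) coordinates inside the image.
    Returns an (M, 3) array of colors, one per LiDAR point.
    """
    cols = np.clip(np.round(pixels[:, 0]).astype(int), 0, image.shape[1] - 1)
    rows = np.clip(np.round(pixels[:, 1]).astype(int), 0, image.shape[0] - 1)
    return image[rows, cols]
```

A colored cloud could then be assembled as `np.hstack([points_lidar[mask], colorize_points(pixels, image)])`. Here `points_lidar`, `mask`, and `pixels` are assumed outputs of the projection step: which points landed in the image, and where.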

Step 4: Visualize the Results

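Visualize the fused data by rendering it into a 3D environment or overlaying the depth information onto the camera image. This makes it easy to inspect the scene's geometry and object relationships, and to spot calibration errors, for example when LiDAR points on an object's edge land on the background.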
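For the overlay view, one simple approach is to rasterize the projected points into a sparse depth image the same size as the camera frame. That image can then be color-mapped and alpha-blended over the photo. In this sketch the function name is hypothetical. Pixels without a LiDAR return are set to 0, and where several points land on the same pixel, the nearest one is kept.

```python
import numpy as np

def sparse_depth_map(pixels, depths, width, height):
    """Rasterize projected LiDAR depths into an (H, W) image; 0 marks no return.

    When several points hit the same pixel, the nearest wins (a simple z-buffer).
    """
    depth = np.full((height, width), np.inf)
    cols = np.clip(np.round(pixels[:, 0]).astype(int), 0, width - 1)
    rows = np.clip(np.round(pixels[:, 1]).astype(int), 0, height - 1)
    np.minimum.at(depth, (rows, cols), depths)  # unbuffered per-pixel minimum
    depth[np.isinf(depth)] = 0.0
    return depth
```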

Applications of LiDAR-Camera Fusion

Fusing LiDAR and camera data is essential in various advanced applications, such as:

- Autonomous Driving: The fusion enhances vehicle perception, helping to identify and avoid obstacles, pedestrians, and other road users.

- Robotics: Robots can better interact with their environment by combining depth data (LiDAR) and visual data (camera), improving tasks like object recognition and manipulation.

- Augmented Reality (AR): The fusion improves AR experiences by providing accurate depth and color information for better scene understanding and realistic interaction.

Conclusion

Fusing LiDAR data with camera images significantly enhances environmental perception by combining depth information with color details.

Through precise camera calibration, LiDAR-camera extrinsic calibration, and coordinate alignment, we can create a more accurate and comprehensive representation of the world.

This powerful fusion has transformative applications across autonomous driving, robotics, and augmented reality, driving innovation and improving decision-making.
